Dimensionalizing Forecast Value

Clarity, Leverage, and Efficiency drive the value of information

tl;dr

In quantitative finance, the value of forecasting is obvious. No decisions are made without forecasts, and every forecast is created for some decision.

On Metaculus¹, the signal is just as sharp, but the market for the signal is fuzzier. The gap isn’t quality: it’s that the value proposition is implicit.

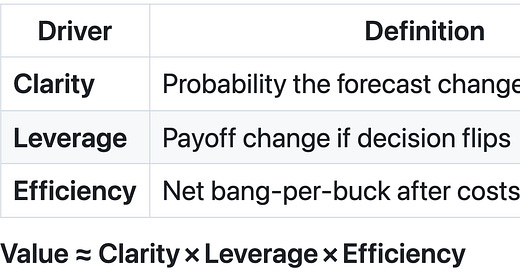

This post is my dimensionalization of forecast value: an identification of the unique factors that determine the importance of answering a question. These factors are Clarity, Leverage, and Efficiency (CLE).

I use CLE to decide when an answer will be valuable, before I spend time asking or answering the question.

To find value, I focus on questions where:

I might change my mind (Clarity)

A lot is at stake (Leverage)

Effort compounds over time (Efficiency)

Decisions Create Questions

Forecasts are useful for making decisions under uncertainty. This is a specific context. It is not always the relevant context.

Much existing content on forecasting assumes a question worth asking, and focuses on methodology improvements. Predicting the future is an unsolved, important problem; it’s good that smart people are working on these improvements.

But suppose I can get a decent forecast answer to any question about the future. What questions should I actually try to answer?

I don’t need a forecast if:

I am not making a decision

I already know what choice I want to make

It doesn’t matter what I decide

Even in a decision-making context, forecasts are less helpful when:

I can’t observe the outcome of my decision

I can’t trust the forecaster

I have no time to decide

Forecasts are more helpful when:

The event repeats

Mistakes are highly visible

Catastrophic outcomes are possible

The decision matters

I don’t know what to do

How do I take this mess of factors and figure out what questions are worth answering?

Dimensionalizing Forecast Value: CLE

This section is a proposed framework for prioritizing forecasts.

There are many sources of uncertainty, and many decisions to make. How do I know what questions I want answered?

I will focus on three sources of value from forecasts:

Clarity: Forecasting can help me make a decision when I don’t know what to do.

Leverage: Forecasting can help me make the best decision when it matters most.

Efficiency: Forecasting now can help me make even better decisions next time.

These are the core forecasting value drivers². They are intended to be roughly orthogonal, and roughly measurable³. This enables prioritization of forecasts based on the value they could create if I had them.

Clarity

Forecasting is only useful when I don't already know what to do. The more uncertain I am about what to do, the more I need Clarity. The more obvious a forecast makes my decision, the more Clarity that forecast provides.

Conversely, if I won’t change my mind in response to new information, then new information (e.g. a forecast) has little value. And if I didn’t change my mind after seeing a forecast, it didn’t provide much value.

Valuable forecasts provide Clarity.

What drives Clarity?

I want to know when an answer will provide Clarity before I spend time asking the question. I dimensionalize Clarity as follows:

Credibility: How trustworthy and accurate is the forecaster?

Random forecaster = no shift

Proven forecaster = strong shift

Timing: Will the forecast arrive around when I need to decide?

Stale intel is dead intel

Just-in-time intel might move the needle

Incentives: Am I motivated to incorporate any forecast?

Bonuses tied to accuracy pull decisions toward the forecast

Office politics can push decisions away from the forecast

Coupling: Does the forecasted variable map cleanly to an action?

“If >70 % chance of hitting 10k MAU, allocate FTE” = tight

“Next Green Party presidential nominee” = loose

Ambivalence: How unsure am I of what to do next?

Confident = locked-in, no shift

Unsure = on-the-fence, potential shift

Stronger Clarity drivers make a forecast likely to change my mind.

How to measure Clarity?

Quick Score: Tag each driver ↑/→/↓ and eyeball the blend; a quick heat‑map is often enough.

Bayes Shortcut: if I know how far my prior mean sits from the decision boundary (μ₀), my prior uncertainty (σ), and the forecast noise (σᶠ), and I assume normality (a bold assumption), the likelihood of crossing the decision boundary is:

\(p_\text{cross} = \Phi\!\left(-\frac{|\mu_0|}{\sqrt{\sigma^2+\sigma_f^2}}\right)\)

Either way: it is possible to quantify how likely it is that I will change my mind in response to a forecast before knowing what the forecast is.
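Here is a minimal sketch of the Bayes Shortcut in Python. It assumes the decision boundary sits at 0, that μ₀ is my prior mean measured as a signed distance from that boundary, and that both my prior and the forecast noise are normal. Read literally, the expression gives the chance that the forecast itself lands on the far side of the boundary. The function names and the example numbers are hypothetical.

```python
from math import erf, sqrt


def normal_cdf(z: float) -> float:
    """Standard normal CDF, written with the error function."""
    return 0.5 * (1.0 + erf(z / sqrt(2.0)))


def p_cross(mu0: float, sigma_prior: float, sigma_forecast: float) -> float:
    """Chance that a noisy forecast lands across a decision boundary at 0.

    mu0: prior mean, as a signed distance from the boundary (assumption).
    sigma_prior: standard deviation of my prior.
    sigma_forecast: standard deviation of the forecast noise.
    """
    spread = sqrt(sigma_prior**2 + sigma_forecast**2)
    return normal_cdf(-abs(mu0) / spread)


# Hypothetical cases: one where I am on the fence, one where I am locked in.
on_the_fence = p_cross(mu0=0.5, sigma_prior=2.0, sigma_forecast=1.0)
locked_in = p_cross(mu0=5.0, sigma_prior=1.0, sigma_forecast=1.0)

print(f"on the fence: {on_the_fence:.3f}")
print(f"locked in:    {locked_in:.4f}")
```

When my prior sits close to the boundary relative to the combined spread, the probability approaches 0.5. When it sits many standard deviations away, the probability is near zero, which matches the Ambivalence driver above.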

Low Clarity: “What would I even do with this information?”

If I see a question that seems cryptic, I know it is low in Clarity.

Will the IMF label cryptocurrency a “systemic risk” before 2027?

Will the EU adopt the term “AI sovereignty” in binding law before 2027?

Will India publish a GDP base‑year revision before 2026?

High Clarity: “A good answer would make my decision for me.”

If I know what I would do given an answer to a question, I know it is high in Clarity.

What will AAPL return over the next week?

Is this text from “Coinbase” a phishing attempt?

Will my latest pull request need to be revised due to a bug?

Leverage

Forecasting is useful when making the right decision is critical. The more valuable it is to make the right choice, the more a forecast could provide Leverage. The more I benefit from a forecast inducing the right choice, the more it has provided Leverage.

Conversely, even a razor‑sharp forecast is meh if the stakes are pocket change or the decision is easily reversed.

Valuable forecasts provide Leverage.

What drives Leverage?

Again, I want to know when an answer will provide Leverage before I spend time asking the question. I dimensionalize Leverage as follows:

Payoff Reversibility: Can I change my decision after implementation?

Rollbacks mean lower leverage

One-way streets spike leverage

Payoff Size: How large is the potential swing from wrong choice to right choice?

Trivial outcome swings mean trivial leverage

Existential risk means existential leverage

Payoff Visibility: How clear is the link between my action and the outcome?

Uncredited wins and blame-free losses mute leverage

Reputation-ruining and career risk magnify leverage

Stronger Leverage drivers make it important to (rely on forecasts to) decide correctly, because the cost of being wrong is huge.

How to measure Leverage?

Quick Score: Tag each driver ↑/→/↓ and eyeball the blend; a quick heat‑map is often enough.

Utility Delta: if I am willing to do some scenario analysis, I take the largest gap between a potential choice and my current choice, weighted by the probability that the forecast flips me to that choice (p_flip). Roughly:

\(\Delta U \approx \max\bigl(p_\text{flip} \times |\text{potential choice} - \text{current choice}|\bigr)\)

For a formal treatment, see the literature on the expected value of sample information (EVSI).

Either way: it is possible to quantify how important changing my mind in response to a forecast could be, before knowing what the forecast is.
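The Utility Delta can be sketched in a few lines of Python. I am assuming that each alternative choice comes with two rough estimates: the probability that a forecast flips me to it (p_flip) and its payoff in the same units as my current choice. The class, the function, and all numbers are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Alternative:
    name: str
    p_flip: float  # chance the forecast moves me to this choice
    payoff: float  # payoff if I make this choice


def utility_delta(current_payoff: float, alternatives: list[Alternative]) -> float:
    """Largest probability-weighted payoff gap versus the current choice."""
    return max(
        (alt.p_flip * abs(alt.payoff - current_payoff) for alt in alternatives),
        default=0.0,  # with no alternatives, there is nothing to gain
    )


# Hypothetical scenario analysis, payoffs in thousands of dollars.
current = 100.0
options = [
    Alternative("hire now", p_flip=0.3, payoff=400.0),
    Alternative("pause project", p_flip=0.6, payoff=150.0),
]

print(utility_delta(current, options))
```

In this made-up scenario the less likely flip dominates, because its payoff gap is six times larger. That is the Payoff Size driver at work.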

Low Leverage: “Why do I care about knowing this?”

If I see a question that seems pointless, I know it is low in Leverage.

Will Slack add a new color palette in Q3?

Will the 2030 Summer Olympics opening ceremony last > 180 minutes?

High Leverage: “If I could know this, my whole life would change.”

If I know I need to get the answer right, I know it is high in Leverage.

Will I be diagnosed with ___ in the next decade?

Will the public market cap of the “AI application layer” be > $50B by 2028?

Will this house be appraised for more than the current price in 5 years?

Efficiency

Forecasting is most valuable when work done once continues to pay off. If the insights, data, or tooling generated today will power many future decisions, then the Efficiency leg of the stool is sturdy. When the same effort is a one‑off sunk cost, Efficiency collapses, even if Clarity and Leverage look great on paper.

What drives Efficiency?

Again, I want to know when an answer will provide Efficiency before I spend time asking the question. I dimensionalize Efficiency as follows:

Learning Spillover: How much value can be created from reusing the work?

A reusable feature store, automated dashboard, or generalizable model amplifies future work.

Bespoke analysis that dies in a slide deck does not.

Acquisition Cost: What does it take to get the answer?

Cheap APIs, “good‑enough” LLM calls, or existing telemetry keep costs low

Custom surveys, specialized lab tests, or hiring a research team drive costs up.

In short: high spillover, low acquisition cost = high Efficiency.

How to measure Efficiency?

Efficiency compares future indirect benefits to current direct costs.

Quick Score: Rate Spillover ↑/→/↓ and Cost ↑/→/↓; a quick heat‑map is often enough.

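Rough ROI: Estimate the ratio of future indirect benefits (the value of reusing the work) to current direct costs (what it takes to get the answer).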

If the ratio is ≫ 1, the question “pays rent.” If ≪ 1, skip or defer.
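Efficiency compares future indirect benefits to current direct costs, so one rough way to get a ratio is to divide expected reuse value by acquisition cost. The sketch below assumes the benefit can be summarized as value per reuse times the expected number of reuses, which is a simplification of my own. The function name and all figures are hypothetical.

```python
def rough_roi(value_per_reuse: float, expected_reuses: float, acquisition_cost: float) -> float:
    """Ratio of expected future reuse value to the up-front cost of the answer."""
    if acquisition_cost <= 0:
        raise ValueError("acquisition_cost must be positive")
    return value_per_reuse * expected_reuses / acquisition_cost


# Hypothetical: a single-use private poll versus an automated daily forecast.
one_off_poll = rough_roi(value_per_reuse=20_000, expected_reuses=1, acquisition_cost=50_000)
daily_forecast = rough_roi(value_per_reuse=500, expected_reuses=250, acquisition_cost=10_000)

for name, roi in [("one-off poll", one_off_poll), ("daily forecast", daily_forecast)]:
    verdict = "pays rent" if roi > 1 else "skip or defer"
    print(f"{name}: ROI {roi:.1f} -> {verdict}")
```

The threshold of 1 is only a break-even line. In practice I would want the ratio comfortably above it before committing.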

Low Efficiency: “Will this even cover its own bill?”

If the answer will be expensive to get with limited reuse potential, I know the question is low in Efficiency.

Commissioning a single‑use private poll to settle one PR headline

Spinning up a bespoke data‑science team to estimate a non‑recurring KPI

Training a custom LLM fine‑tuned on niche logs for a one‑off launch

High Efficiency: “This work amortizes fast.”

If getting the answer produces a process or artifact that can be cheaply reused, I know the question is high in Efficiency.

Automating daily sales‑funnel forecasts that feed every go‑to‑market meeting

Wiring an anomaly‑detection script that pages on any infra budget overrun, month after month

Standardizing a survey instrument whose results benchmark each new product concept

Efficiency in Context

Even a crystal‑clear, high‑leverage forecast can be a net loss if it takes a platoon of analysts and a seven‑figure data purchase to run once. Conversely, a medium‑Clarity, medium‑Leverage signal can be a no‑brainer when it drops out of telemetry I already collect.

Value Is A Product

Forecast value ≈ Clarity × Leverage × Efficiency

Why multiply?

Missing one driver means value ≈ 0

Higher value from one driver increases the importance of the other drivers

But there are plenty of other reasonable modeling choices once I have the inputs.
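As one illustration of the multiplicative view, the sketch below turns Quick Score tags (↑/→/↓, spelled "up"/"flat"/"down" in the code) into numbers and multiplies them. The numeric mapping, the tags assigned to each question, and the function name are all hypothetical. Any monotone mapping would serve the same purpose.

```python
# Hypothetical mapping from Quick Score tags to numbers in (0, 1].
TAG_SCORES = {"up": 1.0, "flat": 0.5, "down": 0.1}


def cle_value(clarity: str, leverage: str, efficiency: str) -> float:
    """Multiply the three driver scores, so one weak driver drags the total down."""
    return TAG_SCORES[clarity] * TAG_SCORES[leverage] * TAG_SCORES[efficiency]


# Hypothetical tags (Clarity, Leverage, Efficiency) for three candidate questions.
questions = {
    "daily sales-funnel forecast": ("up", "up", "up"),
    "single-use private poll": ("up", "up", "down"),
    "Slack color palette in Q3": ("flat", "down", "up"),
}

ranked = sorted(questions, key=lambda q: cle_value(*questions[q]), reverse=True)
for q in ranked:
    print(f"{cle_value(*questions[q]):.2f}  {q}")
```

Under this mapping a single "down" tag caps the product at 0.1 no matter how strong the other two drivers are, which is the behavior the multiplication is meant to capture.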

Summary

CLE is a very general approach for assessing the value of answering a question.

Clarity: Answer when new information can change a decision.

Leverage: Answer when the decision significantly impacts expected value.

Efficiency: Answer when effort compounds faster than cost.

I am actively using this framework to surface valuable questions in my work. I hope it helps you!

¹ I am an advisor to Metaculus, but all views here are my own.

² This is not the only possible model of forecast value. All models are wrong, but some are useful. This one is useful to me, and I hope it will be useful to you.

³ I say “roughly” because there are many choices about how to bucket factors into Clarity/Leverage/Efficiency, and many choices for quantification.